Researchers have trained artificial intelligence to identify schools of sandeel

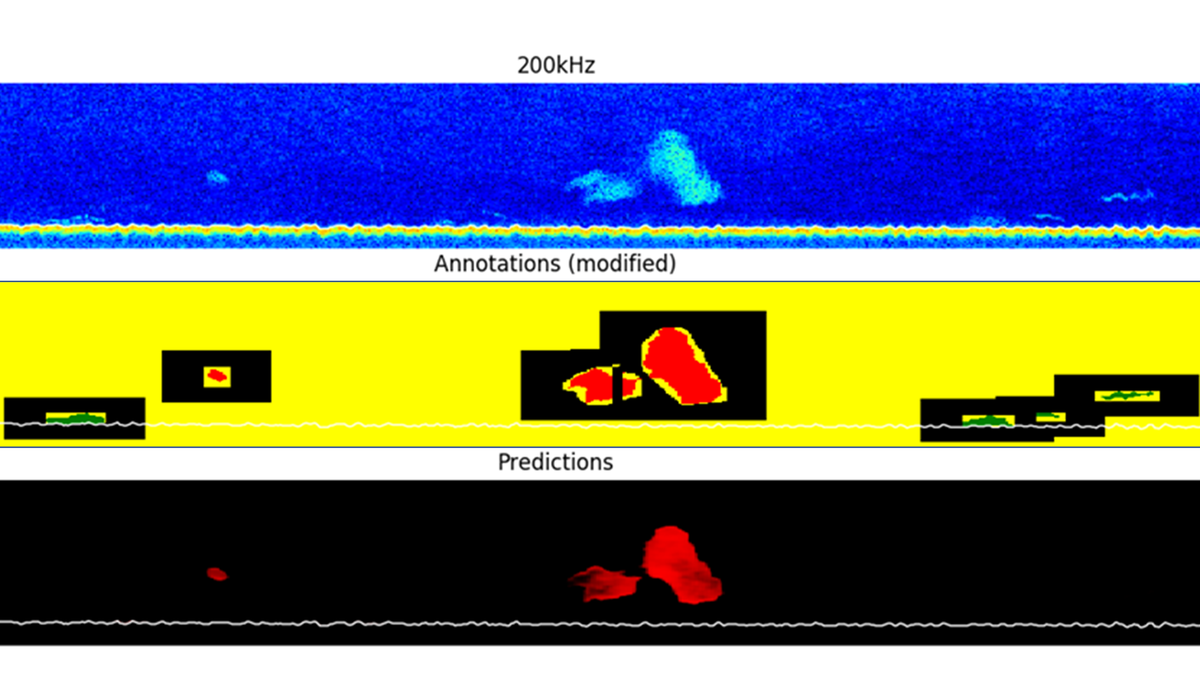

Example of an echo sounder image (top), the same image tagged by a human, where green is other fish, and what the AI identifies as schools of sandeel (bottom).

Published: 11.05.2020 Updated: 15.05.2020

Echo sounders emit sound waves into the water and listen for their echoes.

Each species of fish has a distinctive echo signature, or frequency response, which allows scientists to work out which fish they are seeing in the images.
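The article does not describe how the frequency response is used, so the following is only a hedged illustration. A pixel can be matched to whichever reference signature its relative frequency response lies closest to. The frequencies, the signature values and the names `SIGNATURES`, `relative_response` and `classify` are invented placeholders, not measured values or IMR code.

```python
import math

# Hypothetical setup: four echo sounder frequencies (kHz) and made-up reference
# signatures, given in dB relative to the 38 kHz channel. Real signatures are measured.
FREQUENCIES_KHZ = (18, 38, 120, 200)
SIGNATURES = {
    "sandeel": (-6.0, 0.0, 6.0, 8.0),      # illustrative only
    "other fish": (2.0, 0.0, -2.0, -3.0),  # illustrative only
}


def relative_response(sv_db):
    """Express the backscatter (dB) at each frequency relative to the 38 kHz channel."""
    ref = sv_db[FREQUENCIES_KHZ.index(38)]
    return tuple(v - ref for v in sv_db)


def classify(sv_db):
    """Return the signature name closest (Euclidean distance) to the pixel's relative response."""
    rel = relative_response(sv_db)
    return min(SIGNATURES, key=lambda name: math.dist(rel, SIGNATURES[name]))
```

For example, `classify((-76.0, -70.0, -64.5, -62.0))` returns `"sandeel"`, because its relative response `(-6, 0, 5.5, 8)` is almost identical to the made-up sandeel signature.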

Echo sounding is turned into fisheries advice

During surveys, scientists follow set routes and “count” fish using scientific echo sounders. This allows them to estimate the quantity of fish in the sea and give advice on how much can be harvested sustainably.
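The article does not say how echoes become a quantity of fish. A common approach in acoustic surveys is echo integration. The echo energy recorded along the route, the nautical area scattering coefficient s_A (m² nmi⁻²), is divided by the scattering cross-section of one fish. That cross-section is σ_sp = 4π·σ_bs, where the target strength is TS = 10·log10(σ_bs). The sketch below assumes this textbook relation. The function names and input values are placeholders, not survey data.

```python
import math


def fish_per_square_nmi(nasc_m2_per_nmi2, target_strength_db):
    """Estimate fish area density (fish per nmi^2) by echo integration.

    nasc_m2_per_nmi2: nautical area scattering coefficient s_A (m^2 nmi^-2).
    target_strength_db: mean target strength TS = 10*log10(sigma_bs), dB re 1 m^2.
    """
    sigma_bs = 10 ** (target_strength_db / 10)  # backscattering cross-section (m^2)
    sigma_sp = 4 * math.pi * sigma_bs           # spherical scattering cross-section (m^2)
    return nasc_m2_per_nmi2 / sigma_sp


def total_abundance(mean_density_per_nmi2, area_nmi2):
    """Scale a mean density up to the surveyed area (number of fish)."""
    return mean_density_per_nmi2 * area_nmi2
```

An abundance estimate like this, combined with information on fish size and age, is the kind of input on which harvest advice can be based.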

Interpreting and tagging the images is monotonous and time-consuming, but researchers at the Institute of Marine Research (IMR) and the Norwegian Computing Centre have now tried handing the job over to an artificial intelligence (AI). Their results have been published in a new article.

Trained on many years of sandeel surveys

“We have trained the AI using echo sounder images from 5 of the 11 years that we have been doing our annual sandeel survey. Our human colleague Ronald Pedersen had previously identified the fish and tagged all the images”, explains marine scientist Nils Olav Handegard.

“We then let the AI tag the echo sounder images from the remaining six years, as a kind of final exam.”

Nearly a perfect clone of Ronald

On a scale of 0-10, the artificial intelligence scored 8.7 for its ability to distinguish between sandeel, other species, empty water and the sea bottom. The score was based on how closely it matched Ronald’s interpretation, which was treated as the right answer.
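The article does not say exactly how the 8.7 was calculated. One common way to score a classifier against a human reference is an F1 score. It compares the AI's label with Ronald's label for every pixel and balances precision against recall for the sandeel class. Multiplying by 10 puts it on a 0-10 scale. That mapping, like the function name `f1_score` below, is an assumption made for illustration.

```python
def f1_score(predicted, reference, target="sandeel"):
    """F1 score for one class, comparing predicted and reference labels pixel by pixel."""
    pairs = list(zip(predicted, reference))
    tp = sum(p == target and r == target for p, r in pairs)  # both say sandeel
    fp = sum(p == target and r != target for p, r in pairs)  # AI says sandeel, human does not
    fn = sum(p != target and r == target for p, r in pairs)  # human says sandeel, AI misses it
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

For example, with AI labels `["sandeel", "sandeel", "other fish", "empty water", "sandeel"]` and human labels `["sandeel", "other fish", "other fish", "empty water", "sandeel"]`, the AI finds every sandeel pixel but adds one false one. The F1 score is 0.8, or 8.0 on a 0-10 scale. In the study, the score would be computed only on the six years held back from training.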

The artificial intelligence, a deep convolutional neural network, processes the echo sounder data in several stages, first picking out simple features such as shapes and colours. With each stage it builds up more abstract information about what it can see in the image.
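A toy illustration of what stacked stages means follows. It is not the network from the paper. Each stage slides a small filter over the echogram, keeps only the positive responses and shrinks the result. Later stages therefore respond to larger, more abstract patterns. The filter values here are hand-picked. A real network learns many filters from the tagged images.

```python
def conv2d(image, kernel):
    """Valid 2-D cross-correlation of a list-of-lists image with a small kernel."""
    kh, kw = len(kernel), len(kernel[0])
    rows, cols = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(cols)]
        for i in range(rows)
    ]


def relu(fmap):
    """Keep positive filter responses and set the rest to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Downsample by taking the maximum of each non-overlapping size x size block."""
    return [
        [max(fmap[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def stage(fmap, kernel):
    """One stage: filter, keep positive responses, then shrink."""
    return max_pool(relu(conv2d(fmap, kernel)))
```

Applied to an 8 x 8 echogram with a small bright school in it, two stages with a 2 x 2 filter shrink the map to 3 x 3 and then 1 x 1. Each output value summarizes a progressively larger part of the image.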

Sandeel surveys provide a good starting point

In this case, the task was to identify three categories: sandeel, other fish and empty water.

“The data from the sandeel surveys provide a great starting point for this. They have been categorised carefully by a single person who was looking for one specific species”, explains Handegard.

“By training the artificial intelligence to do this, we have in a sense coded Ronald’s knowledge digitally.”

AI will "take part" in this year's survey

On 23 April, our marine scientists set out on another acoustic survey of sandeel. This year, the artificial intelligence will analyse the echo sounder data in parallel with Ronald.

“The aim isn’t to replace all humans, but to develop an artificial intelligence that is almost as good and that can share the load, particularly when we scale up our monitoring of resources in the future using autonomous vessels.”

Drones can perform real-time monitoring

The IMR has been testing autonomous sailing drones on this very sandeel survey.

“This is undoubtedly part of the future of data collection. It is cheap, environmentally friendly and scalable”, Handegard says.

“The raw data are too big to send back via satellite. But if the vessels are equipped with artificial intelligence to interpret what they’re seeing, they can send their results back in real time”, he says.
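As a hedged sketch of what sending back results instead of raw data could look like, a drone could reduce its classified echogram to a few numbers per stretch of the route. The report format, the function name `summarize` and the grouping by pings are hypothetical, not a description of the IMR system. Here the summary is the share of pixels labelled sandeel in each block of pings, encoded as compact JSON.

```python
import json


def summarize(labels_by_ping, pings_per_interval):
    """Reduce per-pixel class labels to the share of sandeel pixels per block of pings.

    labels_by_ping: list of pings, each a list of class labels (one per depth sample).
    Returns a compact JSON string small enough for a low-bandwidth satellite link.
    """
    report = []
    for start in range(0, len(labels_by_ping), pings_per_interval):
        chunk = labels_by_ping[start:start + pings_per_interval]
        pixels = [label for ping in chunk for label in ping]
        share = sum(label == "sandeel" for label in pixels) / len(pixels) if pixels else 0.0
        report.append({"first_ping": start, "sandeel_share": round(share, 3)})
    return json.dumps(report)
```

The design choice is that the heavy interpretation happens on board. Only a summary a few bytes long per interval has to travel over the satellite link.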

Learning without knowing the right answers

Researchers will continue to improve the artificial sandeel researcher, and they are working on expanding its use to other species and surveys.

“We also hope to start using so-called unsupervised learning. That involves not defining the correct answers that the artificial intelligence has to match, but rather giving it loads of data that it has to make sense of by itself. Then it can become even better than us. But that is for the future”, says Handegard.
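Unsupervised learning covers many methods, and the article does not say which one the researchers have in mind. One of the simplest is k-means clustering. It is shown here purely as an illustration. It groups pixels, for example by their frequency response, into clusters without any human labels. Scientists then decide what each cluster represents.

```python
import math


def kmeans(points, k, iterations=20):
    """Group points (tuples of numbers) into k clusters; returns (centroids, assignments).

    For simplicity the first k points are used as starting centroids; practical
    implementations usually use random or k-means++ initialisation instead.
    """
    centroids = [tuple(p) for p in points[:k]]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        assignments = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = tuple(sum(xs) / len(members) for xs in zip(*members))
    return centroids, assignments
```

No correct answers are supplied. The algorithm only finds groups of similar points, which is why a human still has to interpret the result.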

Reference

Brautaset, Olav, Anders Ueland Waldeland, Espen Johnsen, Ketil Malde, Line Eikvil, Arnt-Børre Salberg and Nils Olav Handegard. "Acoustic classification in multifrequency echosounder data using deep convolutional neural networks." ICES Journal of Marine Science (2020). URL: https://doi.org/10.1093/icesjms/fsz235